Storage is a fundamental part of working with VMware vSphere and all related VMware products. Virtual machines (VMs), VM Templates, ISO and bundled OVA/OVF VM installation files, troubleshooting logs, high availability, and more depend on getting VMware storage right. There are several storage options available, each with its pros and cons. Here we will explore the history, options, best practices, and key variables to consider for VMware storage.

Part of the decision-making process may depend on whether there is already an existing solution in place in your organization, and the availability of funds to purchase third-party solutions that may be a better fit for specific needs. Even if there is an embedded solution in use already, there may be options available that can make utilization of that resource more efficient or more reliable.

The evolution of VMware Storage

VMware began developing its virtualization products in 1998, and first released products to the public in 2001. At this time, computing was understandably quite different than today, although the basic building blocks were similar. Storage back then offered limited options (in both size and type).

Since the early 2000s, server hardware has evolved significantly. With faster CPUs and more RAM, an individual physical VMware server (known as a “host”) can support more VMs than ever. At the same time, the performance and density of storage devices (hard disks, flash drives, networked storage, etc.) have increased, enabling more data to be saved and higher I/O throughput.

For example, solid-state drives (SSDs), which contain integrated circuit chips instead of spinning platters, far outstrip the performance of hard disk drives (HDDs). Today, it is not uncommon to have a vCenter running thousands of VMs, with storage measured in petabytes. Understanding the types of storage supported by VMware and the relative cost of each solution is the key to making wise, cost-effective design decisions for a VMware environment.

VMware Storage at a Glance

To understand the current state of VMware storage, let’s start with a breakdown of storage types and their use cases.

| Storage Type | Location | Options | Use Cases |
|---|---|---|---|
| Block-Local | Server/Host | Spinning Disk/HDDs | Smaller installations with VMs that will not need to move between ESXi hosts frequently. |
| Block-Local | Server/Host | SSD/Flash/NVMe | Smaller installations with VMs that do not need to move between ESXi hosts frequently, but require better I/O performance than HDDs. |
| Block-VSAN | Server/Host | Disk or Flash | Most common in Hyper-Converged Systems. |
| Block-SAN | FC, FCoE or iSCSI Storage Array | Spinning Disk | Enterprise systems that require high fault tolerance and can justify the cost premium. |
| Block-SAN | FC, FCoE or iSCSI Storage Array | SSD/Flash/NVMe or hybrid | Enterprise systems that require high fault tolerance & fast I/O performance, and can justify the cost premium. |
| NFS (NAS) File-based | Networked Server/“Filer” | Spinning Disk, SSD/Flash/NVMe or hybrid | The cost of a SAN Storage Array cannot be justified, a NAS device is already owned, and the multi-function capabilities of a NAS Filer are required. |
| vVol | Any SAN/NAS array that supports vVol objects | Spinning Disk, SSD/Flash/NVMe or hybrid | Organizations willing to meet the requirements for vVols and desiring an object-based storage model with more control in the hands of the vCenter administrator. |

💡Pro-tip: Always review the VMware Compatibility Guide when buying a storage device. The guide will confirm if a device supports a specific version of ESXi or vCenter.

VMware storage options: A deep dive

Now, let’s take a closer look at the popular VMware storage options.

Block Storage

“Block Storage” is a general term used to categorize data storage presented to an operating system as a “disk drive” containing “blocks” of data storage space. Initially, block storage was an actual physical hard drive. Today it is usually an abstracted logical “disk.” The operating system is responsible for creating and managing a “file system” on this disk.

With VMware ESXi, a filesystem called a “VMFS Datastore” is created on the disk and formatted with either the VMFS 5 or 6 filesystem format before use.

Local Disk

“Local Disk” refers to disk space that consists of one or more devices directly attached to a storage controller in a server. Most servers have a SATA (Serial Advanced Technology Attachment), SCSI (Small Computer Systems Interface), and/or a SAS (Serial Attached SCSI) controller integrated with the system board or as a common option, and slots for HDDs/SSDs in the same or similar form factor.

Another option is an SSD on a circuit card, such as a SATA M.2 module, that attaches directly to the system board. Hybrid drives are another option. Hybrid drives have a spinning disk component plus a solid-state component. With hybrid drives, the solid-state component improves performance by caching reads or writes.

A newer and faster technology called NVMe (Non-Volatile Memory Express) is becoming more common. NVMe is not technically a storage type. Rather, it is a storage access protocol that supports solid-state storage directly attached to the CPU via a high-speed PCIe (Peripheral Component Interconnect Express) bus. Depending on the device and workload, devices designed using NVMe can perform up to 7 times faster than SATA SSDs or non-NVMe M.2 modules. The main point to remember about these types of devices is “faster equals more expensive”. However, while NVMe is still relatively expensive, it is becoming more affordable.

An important feature to consider when using local storage is using some form of RAID (Redundant Array of Independent Disks). Various RAID types protect data by either mirroring the data to a second drive or using a calculated value called “parity,” which enables data reconstruction if a drive fails. In servers with a small number of local storage devices, the most common configuration is RAID 1, which mirrors one device to another.
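To make the idea of “parity” concrete, here is a minimal Python sketch of XOR-based parity, the basic principle behind parity RAID levels such as RAID 5. It is an illustration only: real RAID controllers work on stripes across physical drives and rotate parity, and the function names `xor_parity` and `rebuild_missing` are hypothetical helpers invented for this example.

```python
from functools import reduce


def xor_parity(blocks: list[bytes]) -> bytes:
    """Return the byte-wise XOR of equally sized blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    size = len(blocks[0])
    if any(len(block) != size for block in blocks):
        raise ValueError("all blocks must be the same size")
    # zip(*blocks) yields one tuple of byte values per byte position.
    return bytes(reduce(lambda x, y: x ^ y, column) for column in zip(*blocks))


def rebuild_missing(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild a single lost block from the surviving blocks plus parity."""
    return xor_parity(surviving + [parity])


# Three "drives" each hold one data block; a fourth holds the parity.
data = [b"VMFS", b"disk", b"data"]
parity = xor_parity(data)

# Simulate losing the second drive and rebuilding its contents.
recovered = rebuild_missing([data[0], data[2]], parity)
print(recovered)
```

Because XOR is its own inverse, combining the surviving blocks with the parity block yields exactly the block that was lost. This only works for a single failure per parity group, which is why hot spares and prompt drive replacement matter.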

Advantages of local storage:

- It is typically included with a server.

- It is directly attached to the server, using SAS, SATA, M.2, or NVMe, and doesn’t require a SAN or high-speed Ethernet network.

- It is easy to set up and administer.

- Enterprise-Class servers support RAID for data protection, and hot-swapping of drives if one fails.

Disadvantages of local storage:

- Most servers or blades do not come with a large amount of local storage. With VMware it is usually used for the ESXi OS and possibly for low priority VMs.

- To move a VM to another ESXi host, the VM’s storage must be relocated to that host’s local storage. Local storage is not shared across ESXi hosts unless you’re using VSAN Software-Defined Storage (a licensed optional feature described later in this article).

Fibre Channel Storage Area Network (SAN)

Fibre Channel (FC) is a transport protocol optimized specifically for storage traffic. VMware has supported it since the first public release. Fibre Channel encapsulates SCSI commands in FC frames and has logic to ensure lossless delivery. The speed of FC has increased over the years to as high as 128 Gbps, although the most common speeds for device connections are 8 Gbps and 16 Gbps. Fibre Channel devices connected to FC switches create a SAN (Storage Area Network).

Typically, there are two separate switches or sets of switches that are isolated from each other, called “fabrics”. Both servers and storage equipment are configured with connections to each fabric for fault tolerance and additional bandwidth. Servers use adapter cards called HBAs (Host Bus Adapters). Because of the fault-tolerant configuration, there are still active connections between server and storage if a fiber cable disconnects or a switch reboots.
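The value of the dual-fabric design can be sketched with a small Python model. It treats each path from a host to the array as a chain of components and checks which paths survive a failure. The component names (`hba0`, `fabric-a-switch`, and so on) and the `surviving_paths` helper are hypothetical and exist only for this illustration; they do not correspond to any VMware API.

```python
from typing import NamedTuple


class Path(NamedTuple):
    """One end-to-end path from a host HBA to a storage array port."""
    hba: str
    switch: str
    array_port: str


def surviving_paths(paths: list[Path], failed: set[str]) -> list[Path]:
    """Return the paths that do not pass through any failed component."""
    return [path for path in paths if not (set(path) & failed)]


# Hypothetical host with two HBAs, each cabled to a different fabric.
paths = [
    Path("hba0", "fabric-a-switch", "ctrl-a-port0"),
    Path("hba0", "fabric-a-switch", "ctrl-b-port0"),
    Path("hba1", "fabric-b-switch", "ctrl-a-port1"),
    Path("hba1", "fabric-b-switch", "ctrl-b-port1"),
]

# A switch reboot in fabric A leaves the fabric B paths active.
after_switch_reboot = surviving_paths(paths, {"fabric-a-switch"})
print(len(after_switch_reboot))

# With only one fabric, the same failure would remove every path.
single_fabric = [p for p in paths if p.switch == "fabric-a-switch"]
print(len(surviving_paths(single_fabric, {"fabric-a-switch"})))
```

In this model, any single failed HBA, switch, or array port still leaves at least two working paths, which is the property the dual-fabric layout is designed to provide.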

Storage arrays are specially designed systems with a large number of disks or storage modules. A storage array effectively includes all of the device types described in the “Local Disk” section, and one or more controllers that connect to both the drives and the FC SAN. Storage arrays support one or more types of RAID for data protection and various enterprise-grade features such as hot spare drives and hot-swappable drives.

Disk space is allocated to specific servers or groups of servers as desired in units referred to as LUNs (Logical Unit Numbers). When LUNs are presented to multiple ESXi hosts and formatted into shared VMware VMFS datastores, VMs can migrate from the host they are running on to another via “vMotion” since the storage is available to both hosts. VMs can also have their disk space relocated to another shared datastore via “Storage vMotion” as needed.

Storage Arrays are available in capacities up to multiple Petabytes in a single rack, and most can have more storage added non-disruptively. The recent trend has been away from spinning disk to SSD/Flash, and NVMe-capable products have become available more recently.

When choosing between these different storage solutions, you must weigh cost against the performance levels your workloads require. Compared to older storage technologies, they are all relatively expensive. However, the return on investment, flexibility, and fault tolerance have proven worth the cost premium in most enterprise use cases.

Advantages of FC SAN storage:

- Storage space can be allocated in various amounts to different servers as desired and presented to multiple servers simultaneously.

- VMs can vMotion to another compute host without moving storage when a LUN is presented to multiple hosts, and the datastore is visible to both.

- When configured for dual SAN Fabrics (best practice), it provides multiple paths to storage from hosts, and even if a switch or HBA port goes offline, access to storage is not lost.

- Allows backup traffic to avoid traversing the Ethernet network (SAN-based vs. NBD).

- It has proven to be the most reliable and best-performing storage choice.

Disadvantages of FC SAN storage:

- It requires expensive special hardware (HBAs, FC switches, Storage Arrays).

- It is complex to configure and maintain FC SAN solutions.

FCoE (Fibre Channel over Ethernet)

Overview

FCoE is not a storage type; it is a newer protocol that replaces the physical transport layer of Fibre Channel with Ethernet, encapsulating FC frames inside Ethernet frames. This protocol enables SAN functionality to be carried over an existing Ethernet network (if all the end-to-end hardware supports FCoE), or allows a company to purchase only Ethernet hardware, instead of both Ethernet and Fibre Channel, for a new installation.

Servers need a special adapter called a CNA (Converged Network Adapter) to support the encapsulation of packets. Many storage arrays support FCoE natively today. However, it is possible to have a protocol conversion take place inside switches that support this functionality to integrate FC storage arrays with an FCoE SAN. We’ll discuss that scenario in more detail in the “Hybrid” section below.

Ultimately, the appearance of the datastore backing storage to VMware is the same as in an FC SAN; LUNs are created on the storage array and mapped to servers as needed, and VMFS datastores are created. The essential difference is the transport method for all or part of the path the data follows. FCoE supports multipathing, and a fault-tolerant installation should include dual Ethernet networks.

Advantages of FCoE:

- Less expensive hardware compared to FC SANs.

- Management is similar to FC SAN, implying a small learning curve for those familiar with FC SAN.

- Converged Network Adapters (CNAs) on the host side encapsulate FC traffic inside Ethernet frames, so separate FC HBAs are not needed.

Disadvantages of FCoE:

- Like FC SAN, FCoE is complex to configure and maintain.

- Existing storage arrays may not support FCoE, requiring protocol conversion.

- In most cases, FCoE is only a viable solution if the Ethernet network is 10 Gbps or higher.

iSCSI SAN

iSCSI, similar to FCoE, is not a storage type but another protocol. It encapsulates SCSI disk I/O commands into TCP/IP packets and can run over common Ethernet networks.

Advantages of iSCSI:

- Compared to FC SANs, less expensive hardware is required.

- Many storage arrays support iSCSI natively, and many administrative tasks are similar to those for FC or FCoE.

- iSCSI (end-to-end) can be implemented quickly with a small learning curve.

Disadvantages of iSCSI:

- Requires some additional knowledge to set up the server-to-storage connections.

- Existing storage arrays may not support iSCSI.

- In most cases, iSCSI is only a viable solution if the Ethernet network is 10 Gbps or higher.

- There is performance overhead for TCP/IP encapsulation, but the installation of adapters with a TCP/IP Offload Engine (“TOE”) can help offset this by processing the encapsulation efficiently on the adapter.

Hybrid SAN

Hybrids of two or more of the protocols FCoE, iSCSI, and FC SAN exist in many installations. For example, a server blade chassis runs FCoE from the blade to a top-of-rack switch, which converts the I/O traffic to Fibre Channel and uplinks to an FC SAN. Some vendors offer switches with FCoE and FC capabilities and have built-in logic to convert between protocols. iSCSI, as mentioned above, does not require special Ethernet switches but typically does not perform as well as either FC or FCoE.

For a new installation, a hybrid may not make sense. Committing to all FCoE — at least for high-performance production workloads — is usually a better choice. However, upgrading an existing FC SAN with multi-protocol switches and FCoE components could both help to extend the lifecycle of existing FC hardware and ease the transition to all FCoE.

Advantages of Hybrid SAN:

- Permits continued use of existing FC SAN equipment while adding FCoE and/or iSCSI capabilities.

- Hybrid FC/FCoE SAN functionality is already common, as mentioned. Converting more of the SAN to FCoE may decrease the complexity of the SAN.

- Allows for a gradual transition to FCoE and/or iSCSI instead of a “forklift upgrade”.

Disadvantages of Hybrid SAN:

- Increases the complexity of the SAN, which can make troubleshooting more difficult.

- While there may be special switches that are capable of performing a protocol conversion from iSCSI to FC or FCoE, this type of hybrid may not be a viable solution in most cases.

Software-Defined VSAN

VMware VSAN (Virtual SAN) is a licensed software-defined storage product. It is designed to provide shared access to the servers’ local disks described in the “Local Disk” section. To reiterate, without VSAN a local disk is only accessible by the ESXi host it resides on. In effect, VSAN aggregates the local disks from the hosts in a (usually Hyper-Converged) host cluster to form one large VSAN datastore. Because the storage traffic traverses the Ethernet network between ESXi hosts, fast networking is required. VMware’s VSAN documentation provides a wealth of further information.

There are many hardware and software requirements to implement VSAN. Unless it is a pre-configured solution purchased from a VMware Global Partner or the VMware “ReadyNode” configurator is used, ensuring these requirements are met can be difficult.

Sizing a VSAN-enabled cluster properly for fault tolerance is a critical aspect of this process. If a host crashes or is removed for maintenance in a “conventional” cluster with shared SAN storage, there is no change to the storage. With VSAN, however, each host is a building block of the VSAN datastore, so losing a host decreases the datastore’s capacity.
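As a rough illustration of why host count matters for sizing, the following Python sketch estimates usable capacity under deliberately simplified assumptions: every host contributes the same raw capacity, each object is stored as two mirrored copies, and enough space is held back to tolerate the loss of one host. These assumptions, the 20 TB per-host figure, and the `usable_capacity_tb` function are hypothetical; the sketch ignores overheads such as slack space and metadata and is not VMware’s sizing method, so an official sizing tool should be used for real designs.

```python
def usable_capacity_tb(
    hosts: int,
    raw_tb_per_host: float,
    copies: int = 2,
    hosts_to_reserve: int = 1,
) -> float:
    """Estimate usable capacity for a cluster of identical hosts.

    Assumes `copies` full replicas of all data and reserves the raw
    capacity of `hosts_to_reserve` hosts so that data can be rebuilt
    after a host failure. All parameters are illustrative.
    """
    if copies < 1:
        raise ValueError("copies must be at least 1")
    if hosts <= hosts_to_reserve:
        raise ValueError("cluster needs more hosts than it reserves")
    raw_after_failure = (hosts - hosts_to_reserve) * raw_tb_per_host
    return raw_after_failure / copies


# Hypothetical 12-host cluster with 20 TB of raw capacity per host.
raw_total = 12 * 20.0
usable = usable_capacity_tb(hosts=12, raw_tb_per_host=20.0)
print(f"raw: {raw_total} TB, usable estimate: {usable} TB")
```

Even in this simplified model, less than half of the raw capacity is available for VM data, which is why a VSAN cluster should be sized for the failure case rather than for the day everything is healthy.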

Advantages of VSAN storage:

- Utilizes local disk space on servers that might otherwise be unused.

- Removes the need for external SAN hardware and networking.

- Is managed completely from within vCenter.

Disadvantages of VSAN storage:

- Software and hardware model and version requirements are restrictive.

- Requires an additional license.

VSAN Datastore Example:

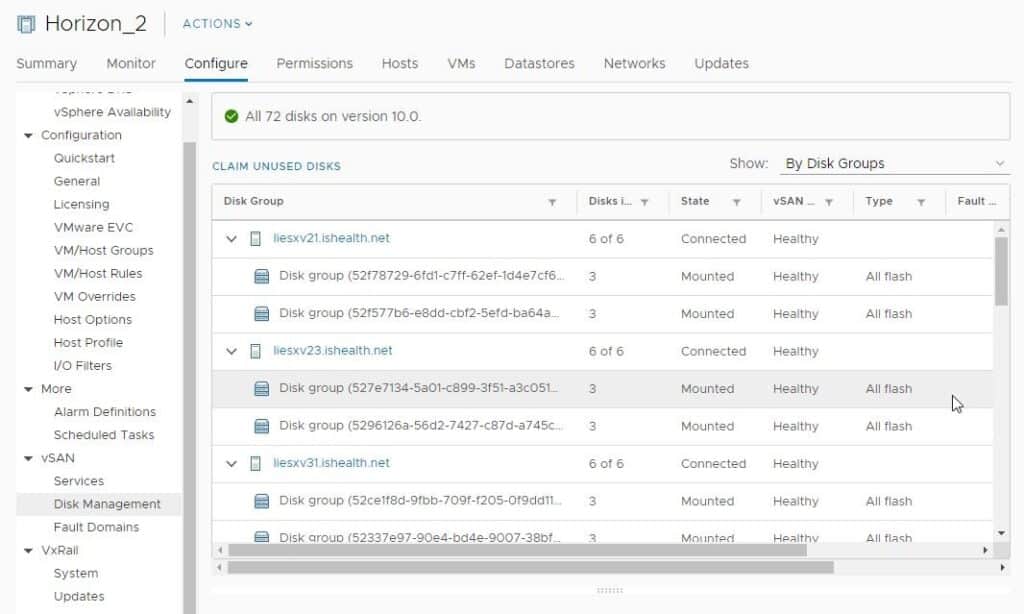

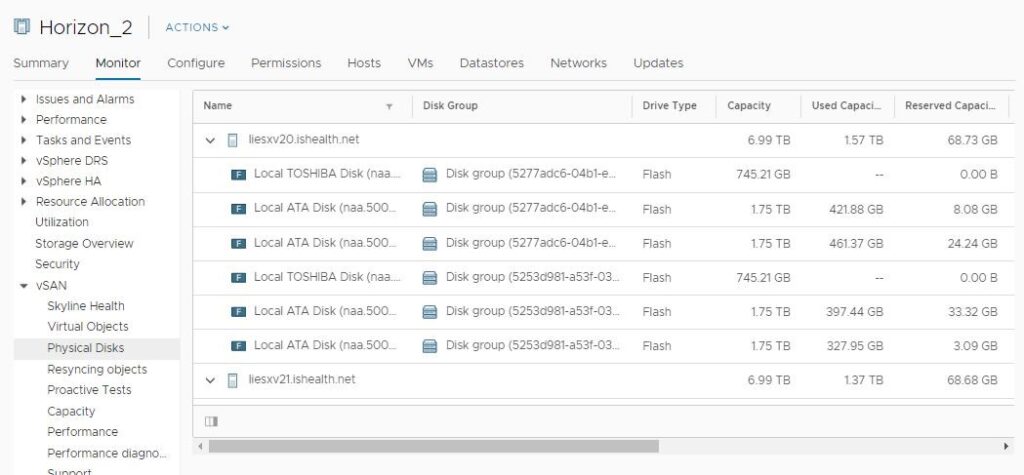

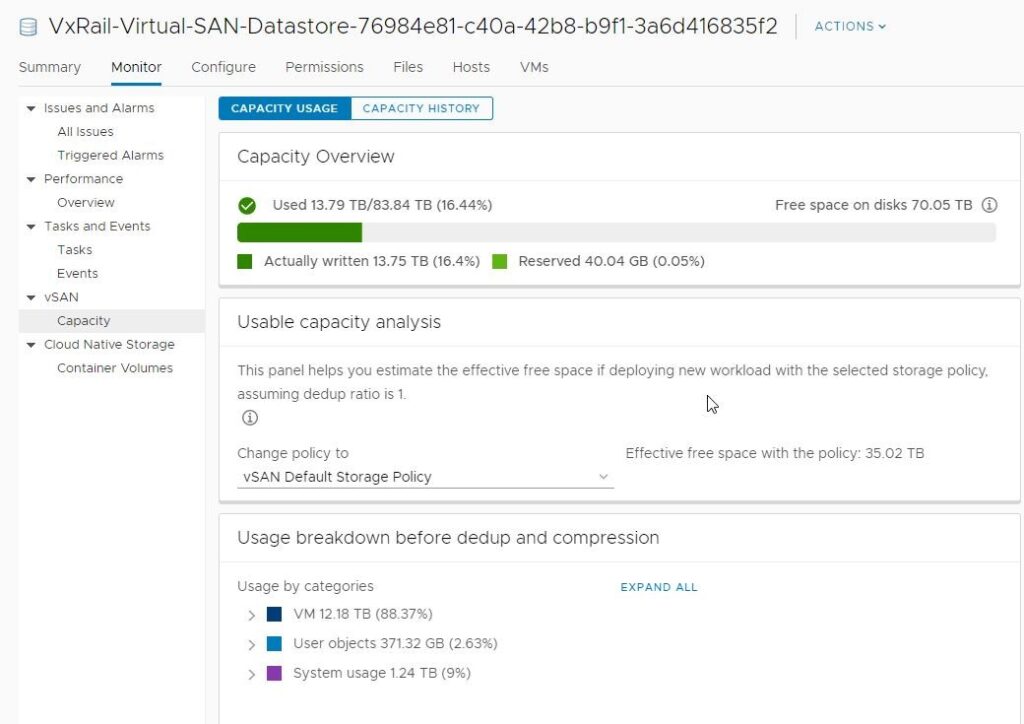

The first screenshot shows the disk groups in a VSAN datastore, and the second shows the actual physical disk layout on one of the hosts. The third shows the combined datastore capacity with 12 Dell/EMC VxRail hosts.

NFS File-Based Network Attached Storage (NAS)

Another type of storage supported by VMware (since ESX 3) is the Network File System (NFS). NFS was developed for the UNIX operating system as a method of sharing files over a network. The NFS server (typically an Enterprise Storage Array that supports NFS, sometimes called a “Filer”) manages the file system, as well as other aspects such as accessibility and permissions.

NFS datastores for VMware are provisioned and “exported” to servers similarly to those used for server or end-user shared drives. Since they are file-based, there is no VMFS formatting required.

Advantages of NFS storage:

- Uses existing Ethernet and TCP/IP infrastructure.

- Provisioning and maintaining the shares is relatively easy compared to some other options discussed.

Disadvantages of NFS storage:

- There is a slight CPU utilization increase on ESXi hosts using NFS datastores.

- Unless NFSv4.1 is used on all devices, fault tolerance for transport must be provided by network redundancy.

vVols

Yet another type of VM storage, vVols are object-based entities that are accessed and managed by API calls from vCenter through VASA (vSphere APIs for Storage Awareness). Storage is allocated on a per-VM basis. The physical storage can be either an FC, FCoE or iSCSI storage array or NAS Filer with built-in support for vVols. The transport can be either FC, FCoE, or Ethernet, and is referred to as the PE (Protocol Endpoint). API calls occur over TCP/IP networks (“Out of Band”). vVols require that the ESXi hosts have HBAs with drivers that support vVols.

There are many considerations when looking at the option of implementing vVols. We recommend you consult the VMware documentation on vVols for a complete understanding of the complexities.

Advantages of vVols:

- Uses a more “modern” object-based approach to VMware storage.

- Puts more storage control into the hands of the vCenter administrator.

Disadvantages of vVols:

- vVols have a restrictive set of capability and compatibility requirements.

- Reliance on APIs and layers of abstraction adds complexity and dependencies to the environment.

Datastore Best Practices

Sizing and Capacity Planning

In general, it is better to provision multiple smaller datastores than one large datastore. Spreading the I/O workload across multiple datastores can provide better performance in most cases. That isn’t true if all of the datastores are provisioned from the same disk pool on the same storage array. However, even in this case a large pool of disks can handle a large I/O load. There is no “one size fits all” answer to this question; the environment and workloads must drive the decision.

For capacity, VMware’s best practice is that a minimum of 20% free space is available on a datastore to allow for growth and other operations such as Snapshots. If VM Snapshots are used while backing up VMs, space will be consumed while the original data is frozen and changes are being written to the snapshot file(s). The key takeaway is: “free” space on a datastore is not “wasted” space; it is necessary.
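The 20% guideline is easy to check with simple arithmetic. The Python sketch below reports the free-space percentage of a datastore and, if it falls short, how much data would need to be moved or deleted to get back above the threshold. The function name `free_space_report` and the sample capacity figures are hypothetical; in practice the capacity and usage numbers would come from vCenter.

```python
def free_space_report(
    capacity_gb: float, used_gb: float, min_free_pct: float = 20.0
) -> dict:
    """Compare a datastore's free space against a minimum percentage."""
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    free_gb = capacity_gb - used_gb
    free_pct = 100.0 * free_gb / capacity_gb
    # Highest usage that still leaves min_free_pct of the datastore free.
    max_used_gb = capacity_gb * (1 - min_free_pct / 100.0)
    return {
        "free_gb": free_gb,
        "free_pct": round(free_pct, 1),
        "meets_guideline": free_pct >= min_free_pct,
        "gb_to_reclaim": max(0.0, used_gb - max_used_gb),
    }


# Hypothetical 4 TB datastore with 3,500 GB in use.
report = free_space_report(capacity_gb=4096, used_gb=3500)
print(report)
```

Running a check like this before starting snapshot-based backups helps confirm that there is room for the snapshot files to grow while the original data is frozen.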

Fault Tolerance

One reason for the implementation of many options such as dual FC Fabrics, dual or meshed networks, multiple network interface cards (NICs) or HBAs on servers, RAID protection and hot-swappable disks, to name a few, is fault tolerance. No one wants to be the one who has to explain to the boss why a failed drive or a cut fiber optic cable took down one server, let alone possibly hundreds of them.

Having a VMware host cluster with automated DRS and adequate compute or VSAN capacity to survive at least one host failure without an outage, along with redundant paths so that the reboot of an Ethernet or FC switch does not cut off access to storage, will undoubtedly permit the vCenter administrator to sleep better at night. Data protection and uptime for VMs performing critical business processes should be the main drivers behind fault tolerance choices.

Datastore Clusters and Storage DRS

Although it is recommended that datastores be presented to a single Host Cluster in vCenter, this is not a requirement. A “Datastore Cluster” is a logical entity that groups a set of datastores together to enable functionality and policies that have operational benefits.

“Storage DRS” is the storage counterpart of DRS (Distributed Resource Scheduler), which balances the compute load on ESXi hosts. Storage DRS can automatically relocate VM data to balance space utilization and I/O latency between the datastores in the Datastore Cluster. Some administrators use Datastore Clusters and Storage DRS, while others prefer to manage these tasks manually. The decision will depend on the needs of the organization.
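The kind of decision involved in space balancing can be sketched in a few lines of Python. This is a toy greedy heuristic, not VMware’s Storage DRS algorithm: it looks only at space (not I/O latency), and the `Datastore` class, the `recommend_move` function, the 80% threshold, and the VM names are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    name: str
    capacity_gb: float
    vms: dict[str, float] = field(default_factory=dict)  # VM name -> used GB

    @property
    def used_gb(self) -> float:
        return sum(self.vms.values())

    @property
    def used_pct(self) -> float:
        return 100.0 * self.used_gb / self.capacity_gb


def recommend_move(datastores: list[Datastore], threshold_pct: float = 80.0):
    """Suggest one VM move from the fullest to the emptiest datastore.

    Returns (vm_name, source_name, target_name), or None if no datastore
    is above the threshold or no single move would fix the imbalance.
    """
    source = max(datastores, key=lambda d: d.used_pct)
    target = min(datastores, key=lambda d: d.used_pct)
    if source is target or source.used_pct <= threshold_pct:
        return None
    # Prefer the smallest VM whose move brings the source under the
    # threshold without pushing the target over it.
    for vm, size_gb in sorted(source.vms.items(), key=lambda item: item[1]):
        source_after = 100.0 * (source.used_gb - size_gb) / source.capacity_gb
        target_after = 100.0 * (target.used_gb + size_gb) / target.capacity_gb
        if source_after <= threshold_pct and target_after <= threshold_pct:
            return (vm, source.name, target.name)
    return None


ds1 = Datastore("ds-01", 1000, {"web01": 300, "db01": 450, "app01": 150})
ds2 = Datastore("ds-02", 1000, {"test01": 200})
move = recommend_move([ds1, ds2])
print(move)
```

Administrators who manage placement manually are effectively performing this kind of evaluation by hand, which is one reason automated balancing becomes more attractive as the number of datastores grows.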

Datastore Creation Process

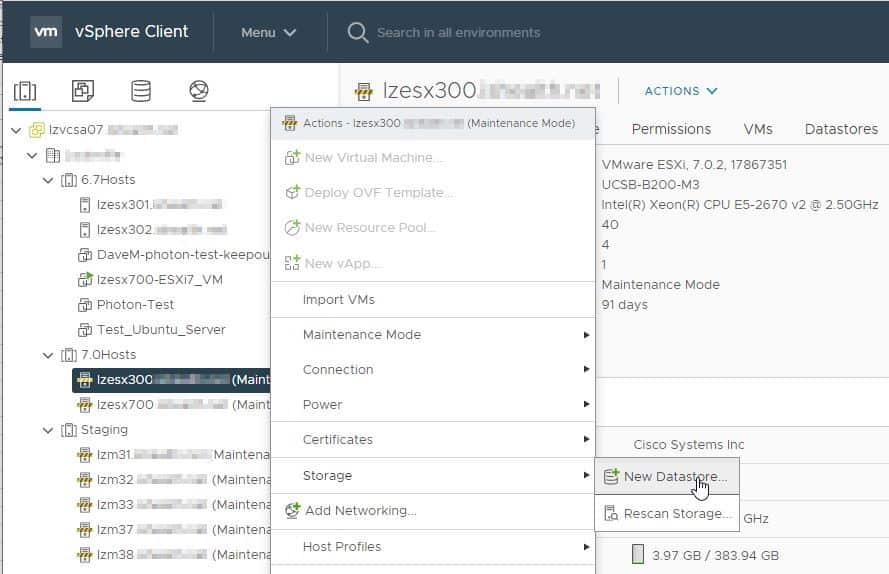

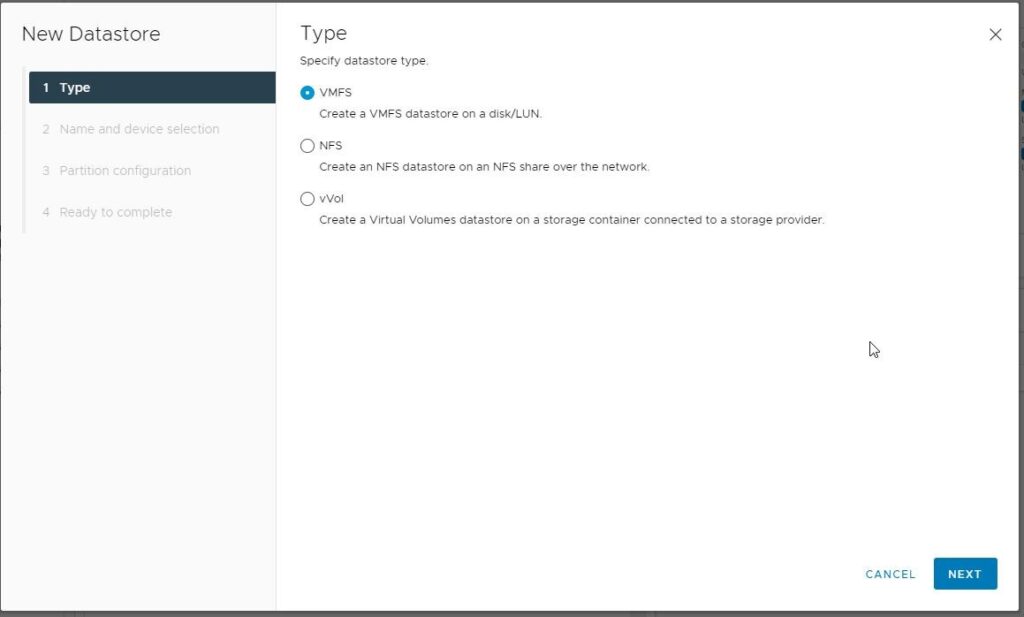

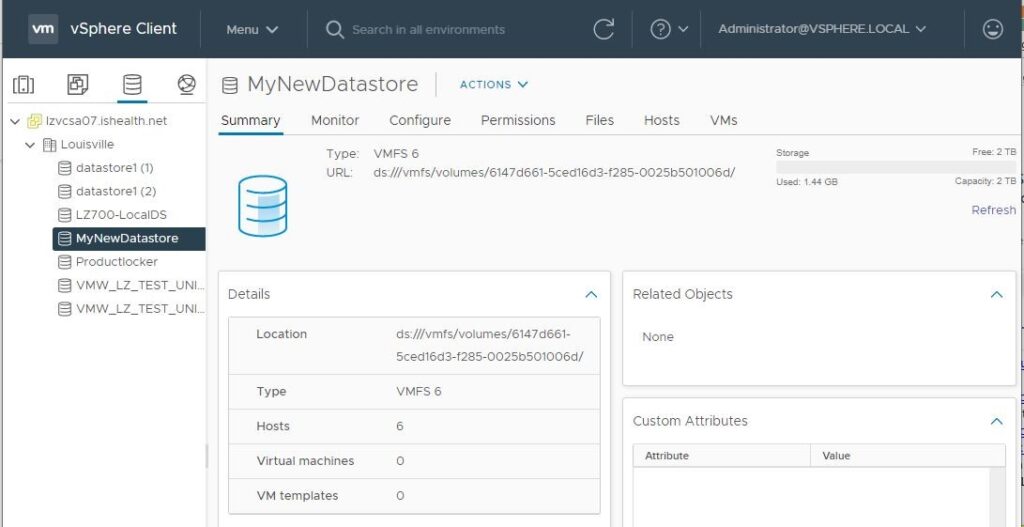

After storage of one of the aforementioned types has been presented, the vCenter administrator only needs to perform a few steps to create and use a datastore. In the case of a VMFS datastore, the process involves right-clicking on one of the ESXi hosts the LUN has been presented to and selecting “Storage | New Datastore”.

This invokes the following dialog:

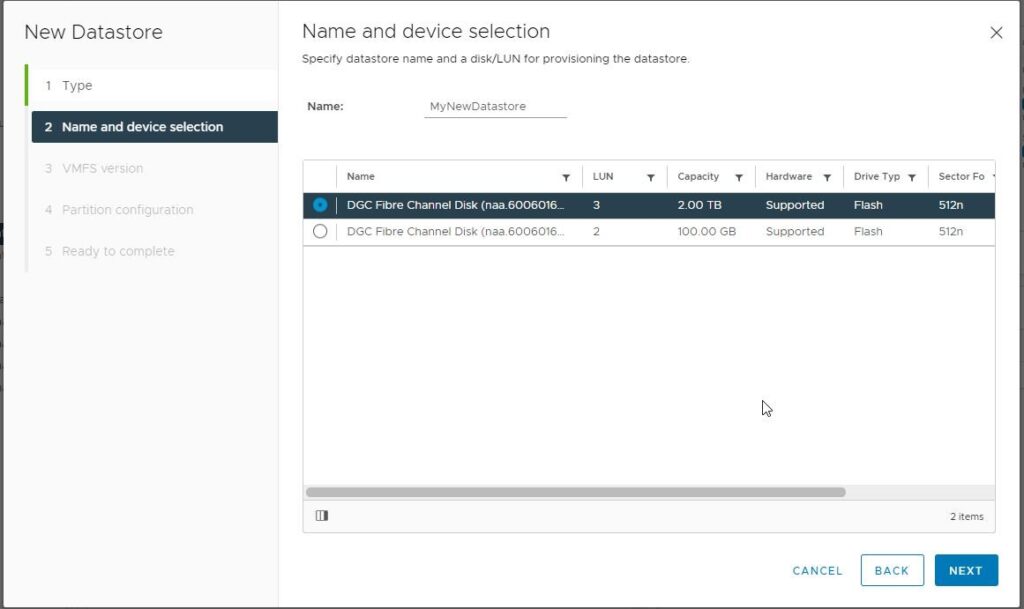

Clicking “NEXT” brings up this dialog, where the administrator enters a name for the datastore and selects the correct LUN/device:

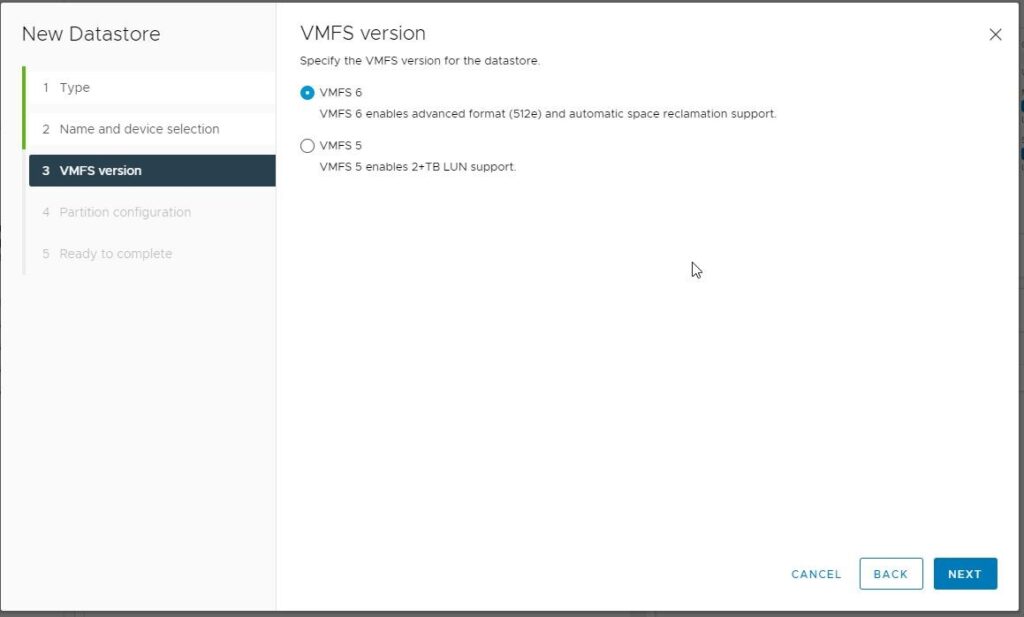

After clicking “NEXT”, the VMFS version is selected: [NOTE: VMFS 6 has enhancements for space recovery for deleted data on flash/SSD drives, and is the recommended choice]

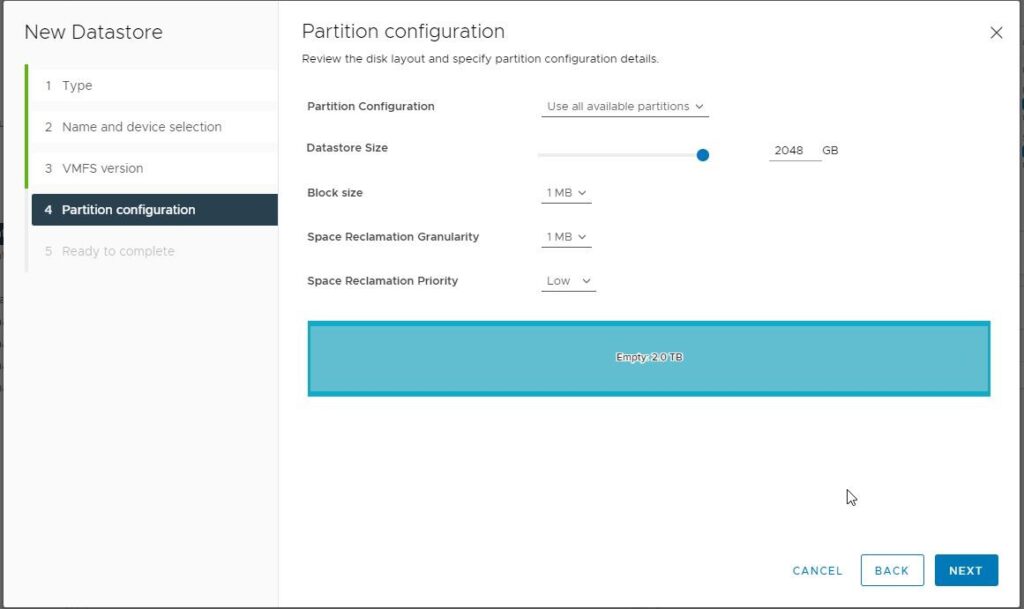

Next, we choose a partition size. Most common use cases should use the entire partition.

After clicking “NEXT” here, you will be presented with a summary of the operation.

After clicking “FINISH”, there will be a short waiting period while ESXi formats the datastore and vCenter invokes a storage rescan on all ESXi hosts the device is presented to. Then, the new datastore will appear in the datastore list.

Conclusion

As you can see, there are many options to understand and many choices to be made when deciding which approach to VMware Storage is best for your organization. Of course, the purchase decisions must be deemed necessary and cost-effective by those who authorize the purchases and pay the invoices. Understanding the options, their advantages and disadvantages, and the implications of the choices will enable the vCenter administrator to advocate for the best choice to maintain uptime for the business and protect critical data.
