VMware Raw Device Mapping (RDM) is a method of presenting storage to a virtual machine (VM) in VMware vSphere that gives the VM access to many features of raw Small Computer System Interface (SCSI) devices. Many of these raw SCSI features are not available with virtual disk files (VMDKs). There are a variety of scenarios where using RDM devices is advantageous or even required. However, RDM also has disadvantages, and it is not an ideal choice for all VMware environments.

To help you understand the pros and cons of VMware RDM, this article takes a closer look at what it is, how it works, and its popular use cases.

Types of VM storage

Typically, VMs use storage that the VMware ESXi host virtualizes. This storage consists of virtual disk or “VMDK” (or “.vmdk”) files. These files are stored on VMware datastores that are presented to the ESXi hosts. A datastore is backed by one of the supported storage types, such as a SAN LUN formatted with the VMFS filesystem or an NFS volume.

An RDM also uses a file with the .vmdk extension, but it is a “proxy” file containing only mapping information. This proxy file points to a raw physical Storage Area Network (SAN) Logical Unit Number (LUN) storage device.

Two Modes of RDM Storage

Before discussing the use cases for RDM, note that vSphere supports two distinct modes of RDM. They share some characteristics but also differ significantly.

- Physical Mode: The ESXi hypervisor VMkernel passes all SCSI commands from the VM through to the physical SAN LUN, except for the “REPORT LUNs” command.

- Virtual Mode: The mapped device is fully virtualized. The “real” hardware characteristics are abstracted from the VM. The VMkernel only sends READ and WRITE to the mapped device.
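The difference between the two modes can be sketched as a simple filter on SCSI commands. This is only a toy model of the behavior described above, not VMware code: the `RdmMode` enum and the `reaches_lun` function are hypothetical names invented for illustration, and commands are represented as plain strings.

```python
from enum import Enum


class RdmMode(Enum):
    """Hypothetical labels for the two RDM modes described above."""
    PHYSICAL = "physical"
    VIRTUAL = "virtual"


def reaches_lun(mode: RdmMode, scsi_command: str) -> bool:
    """Return True if this toy model forwards the command to the mapped LUN."""
    command = scsi_command.strip().upper()
    if mode is RdmMode.PHYSICAL:
        # Physical mode: everything is passed through except REPORT LUNs.
        return command != "REPORT LUNS"
    # Virtual mode: only READ and WRITE are sent to the mapped device.
    return command in {"READ", "WRITE"}


if __name__ == "__main__":
    for cmd in ("READ", "WRITE", "INQUIRY", "REPORT LUNs"):
        print(cmd, reaches_lun(RdmMode.PHYSICAL, cmd), reaches_lun(RdmMode.VIRTUAL, cmd))
```

In this sketch, a command such as INQUIRY reaches the LUN only in Physical Mode, which is why tools that need direct SCSI access depend on that mode.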

As we explore the use cases for RDM storage below, the differences between these modes and when you should use them will become clearer.

Why Use RDM?

The “conventional” storage configuration used in vSphere consists of a supported storage type that is available to the ESXi hosts, such as a SAN LUN, NFS volume, or even storage that is local to the physical ESXi host, presented as datastores. The virtual “disks” used by a VM are actually files that reside on one or more of these datastores.

The VM’s operating system (OS) does not “see” the physical storage device that hosts the .vmdk files. Instead, the virtual disks appear to the OS as disks, in the same way that physical disks are presented to an OS on a physical server.

For most VMs, this is an adequate arrangement. However, there are scenarios where a VM needs access to the underlying LUN using SCSI commands and other features that are not available using standard VMDK files. RDM can help in these cases. Specific use cases for RDM include:

- Multi-server clustering – most clustering solutions require common access to at least one storage device.

- SAN-aware applications, such as management software and agents – some tools of this type require access to a SCSI LUN.

- Rapid physical-to-virtual server conversion – when one or more large LUNs are involved.

Advantages of Using RDM

Without RDM, those configurations are not feasible using VMs and require physical servers. However, VMs are almost always more cost-effective and offer many fault tolerance and backup/recovery features not available with physical servers.

For example, RDM devices can provide:

- A method to share a storage device between VMs, or between a VM and a physical server

- Direct SCSI command access to a LUN from a VM

- Advanced File Locking for data protection

- Quick migration of a LUN from a physical server to a VM

Disadvantages of Using RDM

As with any technology, there are tradeoffs involved with RDM. For VMware environments, there are several disadvantages or limitations when using RDM storage, including:

- VM Snapshots and Clones are not possible when using Physical Mode RDM

- Not all SAN storage arrays support the requirements for mapping LUNs as RDMs

- RDM is not supported on NFS volumes or using Direct-Attached block devices

- RDM cannot be used for a disk partition; it must use the entire LUN

- It adds more complexity to a vSphere environment

💡Pro-tip: Use the VMware Compatibility Matrix. For any combination of physical hardware and VMware software components, including RDM use cases, we recommend using the VMware Compatibility Matrix to verify support of the overall configuration.

How Does RDM Work?

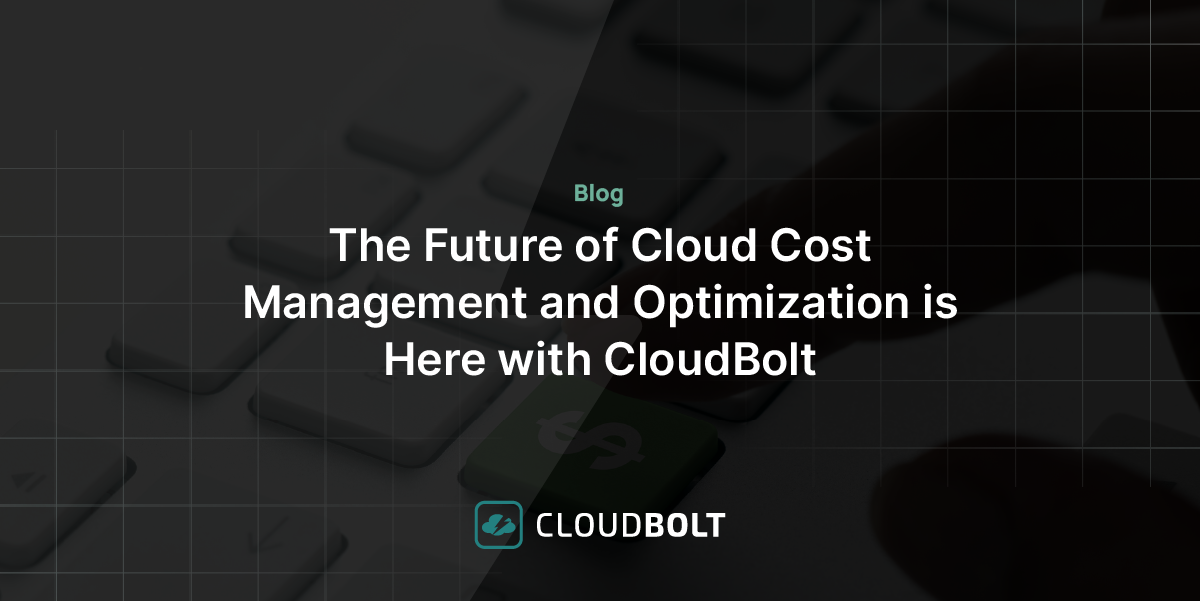

While it uses some of the same methods as other technologies, VMware’s implementation of RDM is rather innovative. VMware RDM uses a “proxy” VMDK virtual disk file to map metadata to the actual LUN. That means vSphere and ESXi are aware of the physical LUN but use the proxy file as an intermediary between the VM and the physical LUN.

At first, this all may be a bit difficult to understand because there are multiple levels of virtualization.

The VM OS sees a VMDK file as a “disk” device (which it is not; it is a file on a datastore), yet for RDMs the file is a “pass-through” redirector to the physical LUN.

The diagram in this section simplifies the flow of messaging with RDM.

Example RDM Use Cases

Now, let’s take a look at some examples of real-world RDM use cases.

Clustered Servers

There are many different clustering schemes and configurations. In most cases, one requirement is that all servers participating in the cluster have access to at least one shared storage resource. The first purpose of these shared storage resources is to keep the database or file system available even if one of the servers crashes or is removed for maintenance.

A second purpose is to serve as what many cluster configurations call a “quorum” disk or “heartbeat” disk. Each server or cluster “node” has concurrent read/write access to the quorum disk, and the nodes periodically update it with information about their state. If a node goes down unexpectedly, the other nodes can react by taking over the failed node’s functionality.
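As a rough illustration of the heartbeat idea, the sketch below models the quorum disk as a shared record of each node's last update time. It is a toy model under stated assumptions, not how any particular cluster product works: the `QuorumDisk` class, its method names, and the timeout value are all hypothetical, and real clustering software adds locking, fencing, and voting on top of this.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class QuorumDisk:
    """Toy stand-in for a shared quorum disk: node name -> last heartbeat time."""
    heartbeats: Dict[str, float] = field(default_factory=dict)

    def write_heartbeat(self, node: str, now: float) -> None:
        # Each node periodically records that it is still alive.
        self.heartbeats[node] = now

    def failed_nodes(self, now: float, timeout: float) -> List[str]:
        # A node is treated as failed if its last heartbeat is older than timeout.
        return sorted(
            node for node, last_seen in self.heartbeats.items()
            if now - last_seen > timeout
        )


if __name__ == "__main__":
    disk = QuorumDisk()
    disk.write_heartbeat("node-a", now=0.0)
    disk.write_heartbeat("node-b", now=8.0)
    # At time 10 with a 5-second timeout, node-a has missed its heartbeat window.
    print(disk.failed_nodes(now=10.0, timeout=5.0))
```

Because every node reads the same record, a surviving node can notice the stale entry and take over the failed node's role, which is why the disk has to be reachable from all nodes at once.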

In the past, with physical servers, dual-attached SCSI devices or SAN LUNs that were mapped to multiple servers provided this functionality.

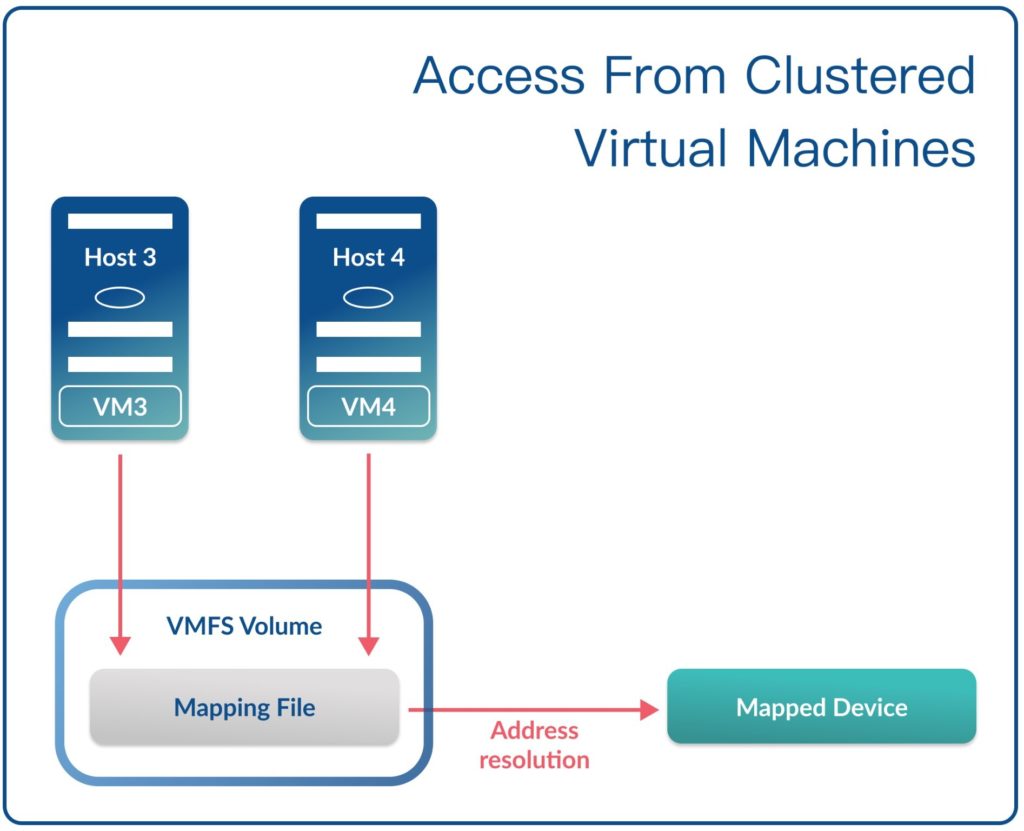

The SAN LUN configuration is still quite popular. Often, this is how VMware datastores are made available to multiple ESXi hosts. When we try to bring VMs into the mix, it gets a bit more complicated. RDM provides similar functionality to VMs.

When building clusters using all VMs, there are two popular configurations:

- Cluster-in-a-box (CIB) – In this configuration, all servers participating in the cluster reside on the same ESXi host. This is typically used for testing and development because the cluster will not survive the loss of the ESXi host without an outage.

- Cluster-across-boxes (CAB) – In this configuration, the servers can reside on different ESXi hosts. In this case, the RDM LUN(s) must be mapped to all ESXi hosts using the same LUN ID(s). This configuration is much more fault-tolerant.

You can use Virtual Mode RDM in both configurations, so VM snapshot and clone capabilities are available. Cluster RDM disks are mapped to all VM servers participating in the cluster.
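The cluster-across-boxes requirement that every ESXi host sees the RDM LUN under the same LUN ID lends itself to a quick consistency check. The following is a minimal sketch that assumes you have already collected the LUN ID each host reports for the device. The function name `check_cab_lun_mapping`, the host names, and the input format are hypothetical and not part of any VMware tool.

```python
from typing import Dict, List


def check_cab_lun_mapping(cluster_hosts: List[str],
                          lun_id_by_host: Dict[str, int]) -> List[str]:
    """Return a list of problems; an empty list means the mapping looks consistent."""
    problems = []
    # Every host that may run a cluster node must have the LUN mapped.
    for host in cluster_hosts:
        if host not in lun_id_by_host:
            problems.append(f"{host}: LUN is not mapped")
    # All hosts that do see the LUN must see it under the same LUN ID.
    seen_ids = {lun_id_by_host[h] for h in cluster_hosts if h in lun_id_by_host}
    if len(seen_ids) > 1:
        problems.append(f"LUN IDs differ across hosts: {sorted(seen_ids)}")
    return problems


if __name__ == "__main__":
    hosts = ["esxi-01", "esxi-02", "esxi-03"]  # hypothetical host names
    mapping = {"esxi-01": 12, "esxi-02": 12, "esxi-03": 14}
    for problem in check_cab_lun_mapping(hosts, mapping):
        print(problem)
```

Returning a list of problems rather than a single boolean makes it easier to report every mismatch in one pass.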

- Physical-to-Virtual Clustering – There are scenarios where a hybrid cluster containing both physical and virtual servers makes sense. For example, a powerful (and expensive) physical server might be the primary host for a high-performance database, but the cost of having two or more similar servers can be prohibitive. Configuring one or more VMs as the failover targets for the primary server will at least provide fault tolerance to the database, if not the same performance level during a failover.

In the hybrid physical/virtual scenario, only Physical Mode RDM can be used. In this case, the physical host is presented with the SAN LUN(s) directly, while the VMs use the Physical Mode RDM pass-through as described above.
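The mode constraints mentioned in this article can be condensed into a small decision helper. This is a sketch of the rules as stated here (snapshots and clones are unavailable in Physical Mode, and hybrid physical/virtual clusters require Physical Mode), not an official support matrix. The function name `choose_rdm_mode` and its parameters are hypothetical.

```python
def choose_rdm_mode(needs_snapshots_or_clones: bool,
                    includes_physical_server: bool) -> str:
    """Suggest an RDM mode from the two constraints discussed in the article."""
    if includes_physical_server and needs_snapshots_or_clones:
        # Hybrid clusters need Physical Mode, which rules out snapshots and clones.
        raise ValueError(
            "Conflict: hybrid clusters require Physical Mode, "
            "but snapshots and clones are not possible in Physical Mode"
        )
    if includes_physical_server:
        return "physical"
    if needs_snapshots_or_clones:
        return "virtual"
    # Neither constraint applies, so other requirements decide.
    return "either"


if __name__ == "__main__":
    print(choose_rdm_mode(needs_snapshots_or_clones=True, includes_physical_server=False))
    print(choose_rdm_mode(needs_snapshots_or_clones=False, includes_physical_server=True))
```

Raising an error for the conflicting case makes the tradeoff explicit instead of silently picking one requirement over the other.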

Physical to Virtual Migration

Imagine that you have a physical Microsoft Windows server with one or more very large SAN LUNs, formatted as individual disks and containing many terabytes of data. You’ve been tasked with converting this server to a VM to retire aging hardware or to save costs. Your first thought might be to create a new VM with the same disk space, format the new virtual drives, then copy all the data over the network. Using RDMs could enable you to move to a VM faster and migrate the data later, or even leave it where it is.

Assuming that your SAN storage array supports it, you could map the same LUNs to the ESXi host(s) where the new VM will reside, then map these LUNs as RDMs to the new VM. You can then migrate from the old physical server to the new virtual server very quickly. Afterward, if you choose, you can move the data off the RDMs and onto actual VMDK disks using host-based migration tools.

SAN-Aware Applications

Examples of SAN-aware applications include NetApp SnapManager for Exchange/SQL and the Dell/EqualLogic VSS Writer. These applications typically have agent software that uses an Application Programming Interface (API) to communicate with the storage array and needs direct access to LUNs for functionality such as creating snapshots. Using a VM to host these applications instead of dedicating physical servers can result in cost savings and a reduction in administration requirements.

Conclusion

VMware RDMs are a powerful feature that can extend the capabilities of VMs into use cases that required physical servers and direct physical SAN connectivity in the past. If your organization has a use case where RDM storage can allow you to use VMs instead of physical servers, the convenience and cost savings can be significant.
