Balancing Performance and Budget: Strategies for Kubernetes Cost Optimization

Kubernetes, which commanded a 92% share of the container orchestration market as of 2023, is the undisputed leader in the space. The platform is renowned for its scalability, resilience, and versatility. It is used across a wide range of industries and has received development contributions from more than 7,500 companies. As adoption escalates, Kubernetes cost optimization has moved to the top of organizational agendas.

Resource management is a well-known challenge with Kubernetes. As clusters expand, they become harder to manage, which can lead to cost overruns and budgetary strain. A recent CNCF FinOps survey found that 68% of businesses see year-over-year increases in Kubernetes-related expenses, highlighting the need for more effective spending controls.

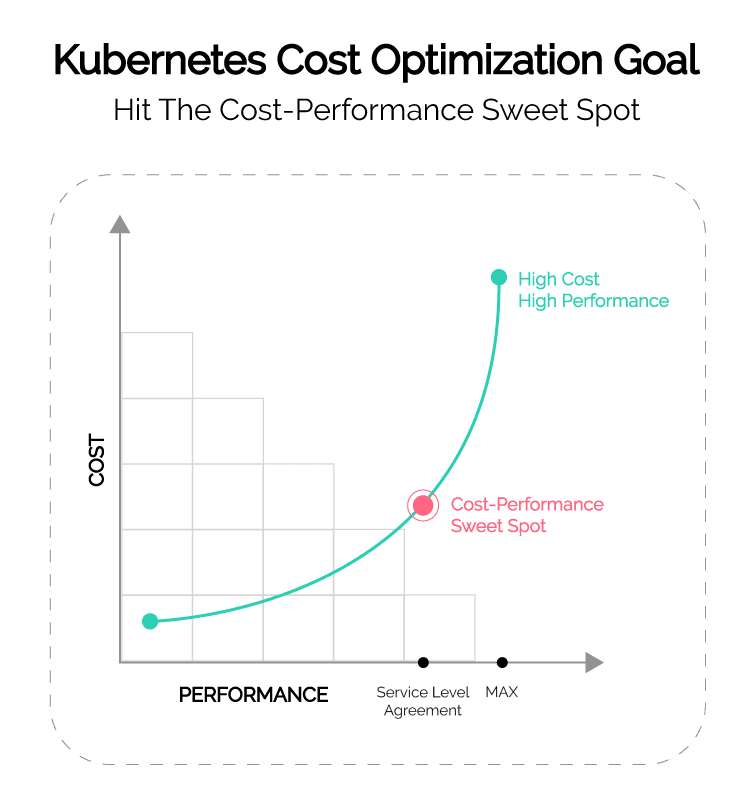

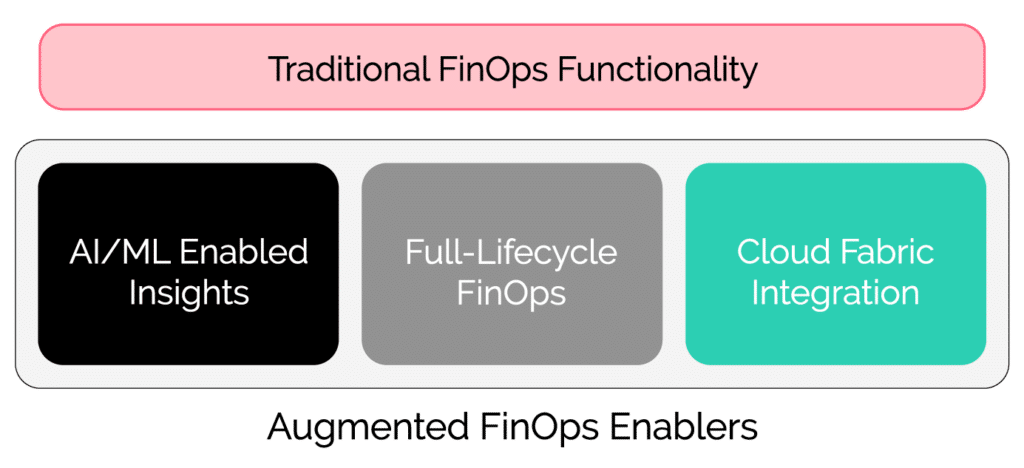

The need for Kubernetes cost optimization has given rise to Augmented FinOps—a modern approach that integrates traditional FinOps with data-driven analytics, artificial intelligence (AI), and automation. Companies can gain a granular understanding of their Kubernetes expenditures, identify inefficiencies, and make informed decisions that lead to the ‘sweet spot’ between high performance and affordability.

This article explores the intricacies of Kubernetes cost optimization, offering insights and best practices in Augmented FinOps and related tools to establish a more cost-effective and efficient Kubernetes environment.

Best practices in Kubernetes cost optimization

| Best Practices | Description |
|---|---|
| Right-size your Kubernetes nodes | Over- and under-provisioning of nodes are leading causes of unnecessary costs. Ensure that workloads have sufficient capacity without leaving resources idle or overburdened. |
| Scale your clusters effectively | Clusters need elasticity so they can grow and shrink with demand. However, misconfigured scaling tools can lead to significant cost increases. |
| Optimize storage use | Orphaned volumes within a cluster incur costs. Use Kubernetes' built-in capabilities to understand how your volumes are provisioned and whether they're attached correctly. |
| Leverage monitoring and logging | Monitoring and logging play a significant role in a cluster's operation. Select the tools that make the most sense for your organization. |
| Leverage the use of quotas | Use resource quotas within namespaces to limit resource consumption, as long as the limits don't adversely impact operations. |
| Leverage proprietary cloud resources like spot and reserved instances | Most cloud providers offer discounts for committing to long-term usage (reserved instances) or for running on interruptible spare capacity (spot instances). Explore these options to see whether they're right for you. |

Main factors contributing to Kubernetes costs

Before jumping into the best practices, let's identify how Kubernetes costs break down. Several key factors have a significant impact on overall expenses.

- Compute resources – Cloud instances (such as Amazon EC2, Azure Virtual Machines, or Google Compute Engine) or on-premises physical servers are the backbone of any Kubernetes cluster, providing the processing power that applications run on.

- Storage costs – Storage is crucial for data persistence, and its cost varies with capacity and with access-speed (performance) requirements.

- Network expenses – Though often overlooked, networking costs, including data transfers and bandwidth usage, can quickly escalate if not monitored closely.

The design of the Kubernetes cluster also plays a crucial role in determining costs. For instance, an on-premises setup might involve substantial upfront investment in hardware and ongoing maintenance. In contrast, cloud-based Kubernetes services offer pay-as-you-go pricing, but costs then depend on how resources scale and how they are used. A deep understanding of your application's needs is essential for choosing the right architecture and resource patterns. Only after building that foundation can you apply the Kubernetes cost optimization best practices outlined below to improve your bottom line further.
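
To make the three cost drivers concrete, here is a minimal Python sketch of a monthly estimate. The rates are hypothetical placeholders, not real provider prices, and the model deliberately ignores discounts, control-plane fees, and other line items.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation: 8,760 hours / 12 months


@dataclass
class ClusterUsage:
    node_count: int
    node_hourly_rate: float        # hypothetical price per node-hour
    storage_gib: float
    storage_rate_gib_month: float  # hypothetical price per GiB-month
    egress_gib: float
    egress_rate_gib: float         # hypothetical price per GiB of outbound transfer


def monthly_cost(u: ClusterUsage) -> dict:
    """Split an estimated monthly bill into compute, storage, and network."""
    compute = u.node_count * u.node_hourly_rate * HOURS_PER_MONTH
    storage = u.storage_gib * u.storage_rate_gib_month
    network = u.egress_gib * u.egress_rate_gib
    return {
        "compute": round(compute, 2),
        "storage": round(storage, 2),
        "network": round(network, 2),
        "total": round(compute + storage + network, 2),
    }


if __name__ == "__main__":
    usage = ClusterUsage(node_count=6, node_hourly_rate=0.10,
                         storage_gib=500, storage_rate_gib_month=0.08,
                         egress_gib=2000, egress_rate_gib=0.09)
    print(monthly_cost(usage))
```

With these made-up numbers, compute accounts for roughly two-thirds of the total, which is why the node-level practices come first.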

#1 Right-size your Kubernetes nodes

Let’s begin by identifying one of the most common challenges engineers face when managing a Kubernetes cluster – the right-sizing of nodes. Misconfigurations around size lead to either overprovisioning or underprovisioning. Underprovisioning nodes can result in insufficient resources for workloads—causing performance issues, increased latency, and potential downtime. Conversely, overprovisioning leads to unnecessary costs due to idle resources.

Having insight into how your nodes are utilized is pivotal in ensuring you are right-sizing your cluster’s processing power. Here are some tips to ensure you’re providing your cluster with what it needs without incurring unnecessary expenses.

Limits and requests

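Rather than allocating resources in blanket fashion at the node level, you can use resource requests and limits, which Kubernetes lets you set on each container in a pod. The scheduler places a pod only on a node with enough unreserved capacity to cover its requests, while limits cap how much CPU and memory a container can consume at runtime. This gives you more precise control over resource allocation, ensuring each pod receives the resources it needs while keeping overall node utilization high.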
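
The Python sketch below illustrates the distinction: requests drive placement, limits cap runtime usage. The quantity parsers handle only the suffixes used here (a subset of Kubernetes quantity syntax), and the node's allocatable capacity (3920m CPU, 14Gi memory) is a made-up example that ignores system pods and DaemonSets.

```python
# Container resources as they would appear in a Pod spec (values are illustrative).
container = {
    "name": "web",
    "image": "nginx:1.25",
    "resources": {
        "requests": {"cpu": "250m", "memory": "256Mi"},  # used by the scheduler for placement
        "limits": {"cpu": "500m", "memory": "512Mi"},    # enforced at runtime, not used for placement
    },
}

BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def cpu_millicores(quantity: str) -> int:
    """'250m' -> 250, '2' -> 2000, '1.5' -> 1500."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'256Mi' -> 268435456. Handles binary suffixes and plain bytes only."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def replicas_per_node(node_cpu: str, node_memory: str, container: dict) -> int:
    """How many copies of this container fit on an empty node, judged by requests."""
    req = container["resources"]["requests"]
    by_cpu = cpu_millicores(node_cpu) // cpu_millicores(req["cpu"])
    by_mem = memory_bytes(node_memory) // memory_bytes(req["memory"])
    return min(by_cpu, by_mem)


print(replicas_per_node("3920m", "14Gi", container))  # 15
```

Here CPU is the binding constraint (15 replicas by CPU versus 56 by memory), so this node shape would leave most of its memory idle. A mismatch like that is a signal to consider a node type with a different CPU-to-memory ratio.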

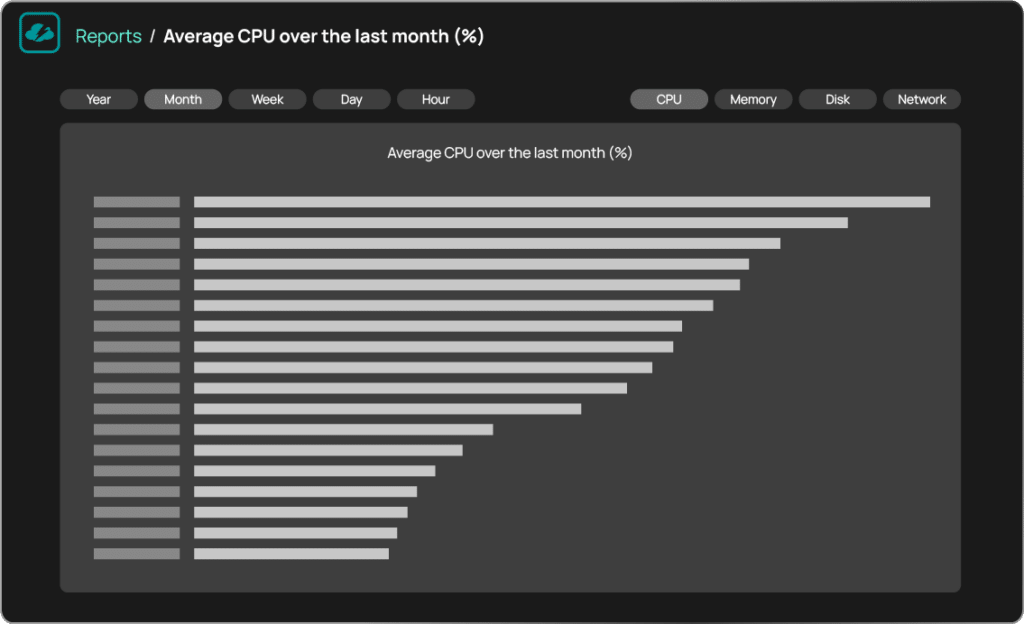

Regular performance monitoring

Implement a routine monitoring process to track nodes’ performance and historical resource usage. Assess CPU, memory, network bandwidth, and storage requirements for Kubernetes cost optimization analysis.
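
Historical usage feeds directly into right-sizing. A common heuristic, assumed here rather than prescribed by Kubernetes, is to set a request at a high percentile of observed usage plus some headroom. The sample data, the 95th percentile, and the 15% headroom below are illustrative choices.

```python
import math


def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def recommend_cpu_request(samples_millicores: list[int], pct: int = 95,
                          headroom_pct: int = 15) -> int:
    """Suggest a CPU request in millicores: the pct-th percentile plus headroom."""
    base = percentile(samples_millicores, pct)
    return math.ceil(base * (100 + headroom_pct) / 100)


if __name__ == "__main__":
    # Hypothetical: 20 CPU usage samples for one container, in millicores.
    usage = list(range(100, 300, 10))  # 100, 110, ..., 290
    current_request = 1000
    suggested = recommend_cpu_request(usage)
    print(f"suggested request: {suggested}m (currently {current_request}m)")
```

Treat memory with more caution than CPU: a container that exceeds its memory limit is OOM-killed, whereas one that exceeds its CPU limit is only throttled.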

Use an augmented FinOps platform

You can use platforms like CloudBolt to obtain granular metrics on resource utilization within your cluster and use machine learning and artificial intelligence to recommend utilization rate improvements. Use this data to make informed decisions on optimizing your nodes’ size.

#2 Scale your clusters effectively

The ability of a cluster to scale effectively is another factor crucial to controlling costs. Scaling dynamically adjusts resources to meet the demands of applications. It ensures that workloads have sufficient resources to operate efficiently without incurring unnecessary costs from misconfigurations.

Tools like the Vertical Pod Autoscaler (VPA) and Horizontal Pod Autoscaler (HPA) are essential. VPA adjusts containers' CPU and memory requests (and, proportionally, their limits) to match observed usage patterns. HPA, by contrast, scales the number of pod replicas based on observed CPU utilization or other selected metrics. For Kubernetes environments deployed in the cloud, tools like Cluster Autoscaler or Karpenter add node-level scaling, automatically adding or removing nodes based on the needs of the workloads.
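
The Kubernetes documentation gives the HPA's core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). No change is made when the ratio falls within a tolerance, which defaults to 0.1. The Python sketch below reproduces only that formula plus min/max clamping. The real controller also applies stabilization windows and handles missing metrics and unready pods. When several metrics are configured, it picks the largest resulting replica count.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA scaling decision for a single metric."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: leave replicas alone
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90, 60))  # 6: averaging 90% CPU against a 60% target
print(desired_replicas(4, 63, 60))  # 4: within the 10% tolerance
print(desired_replicas(4, 20, 60))  # 2: scale in
```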

As an administrator, ensure that you adhere to the following best practices.

Determine the right tool

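There are many tools on the market, both native to Kubernetes and from third parties, that handle scaling. Choose the simplest set that meets your needs, because overcomplicating your scaling setup can introduce new and harder-to-diagnose problems. For example, the VPA documentation advises against running VPA and HPA on the same CPU or memory metrics for a workload, since the two can work against each other.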

Implement resource-based scaling policies

Use metrics like CPU and memory usage to drive scaling decisions, so that resources are allocated based on actual demand rather than on fixed, static allocations.
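
As a sketch of a resource-based policy, the Python below builds an autoscaling/v2 HorizontalPodAutoscaler that targets both CPU and memory utilization. It prints the manifest as JSON, a format that `kubectl apply -f` accepts. The Deployment name `web` and the 70%/80% targets are hypothetical. Utilization is measured relative to the pods' resource requests, so those requests must be set for this policy to work.

```python
import json


def hpa_manifest(deployment: str, min_replicas: int, max_replicas: int,
                 cpu_pct: int, memory_pct: int) -> dict:
    """Build an autoscaling/v2 HPA that scales a Deployment on CPU and memory."""

    def resource_metric(name: str, pct: int) -> dict:
        return {
            "type": "Resource",
            "resource": {
                "name": name,
                # Average utilization across pods, as a percentage of their requests
                "target": {"type": "Utilization", "averageUtilization": pct},
            },
        }

    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": f"{deployment}-hpa"},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [resource_metric("cpu", cpu_pct),
                        resource_metric("memory", memory_pct)],
        },
    }


if __name__ == "__main__":
    # Redirect to a file, then apply with: kubectl apply -f web-hpa.json
    print(json.dumps(hpa_manifest("web", 2, 10, 70, 80), indent=2))
```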

Integrate augmented FinOps for strategic scaling

Augmented FinOps plays another key role in scaling. Some platforms, like CloudBolt, have their own built-in autoscaling features, allowing clients to leverage this essential component right out of the box for their Kubernetes cost optimization requirements.

#3 Optimize storage use

Kubernetes offers various features, such as storage classes, PersistentVolumeClaims (PVCs), and volume expansion, to control storage and its associated costs.

- Storage classes allow administrators to define different classes of storage, each with its own characteristics and costs.

- PVCs are users' requests for storage. When sized carefully, they prevent over-provisioning of storage resources.

- Volume expansion lets a volume grow as an application's needs increase, provided its storage class allows expansion. Kubernetes does not support shrinking a PVC, so it pays to start small and expand when needed.
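
A minimal sketch of these pieces follows, written as Python dicts that mirror the YAML manifests: a StorageClass that permits expansion, and a PVC that uses it. The provisioner `ebs.csi.aws.com` and the `gp3` volume type assume the AWS EBS CSI driver, so substitute your own provider's driver and parameters. The hypothetical `expand_pvc` helper mirrors the API server's rule that a PVC's storage request can be increased but not decreased.

```python
import copy

storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "standard-expandable"},
    "provisioner": "ebs.csi.aws.com",  # assumes the AWS EBS CSI driver
    "parameters": {"type": "gp3"},
    "reclaimPolicy": "Delete",         # delete the disk when its claim is deleted
    "allowVolumeExpansion": True,      # permits growing bound PVCs
    "volumeBindingMode": "WaitForFirstConsumer",
}

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "app-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "storageClassName": "standard-expandable",
        "resources": {"requests": {"storage": "20Gi"}},  # start small
    },
}


def gib(quantity: str) -> int:
    """Parse a whole-number 'NGi' quantity (the only form this sketch handles)."""
    if not quantity.endswith("Gi"):
        raise ValueError(f"unsupported quantity: {quantity}")
    return int(quantity[:-2])


def expand_pvc(claim: dict, new_size: str) -> dict:
    """Return a copy of the PVC with a larger request; anything else is refused."""
    current = claim["spec"]["resources"]["requests"]["storage"]
    if gib(new_size) <= gib(current):
        raise ValueError(f"PVCs can only grow: {current} -> {new_size}")
    updated = copy.deepcopy(claim)
    updated["spec"]["resources"]["requests"]["storage"] = new_size
    return updated


print(expand_pvc(pvc, "50Gi")["spec"]["resources"]["requests"])  # {'storage': '50Gi'}
```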

Together, these features let you allocate storage to match the specific needs of each application and workload. You should also periodically identify and remove orphaned or unattached volumes, which continue to incur costs even when nothing uses them.
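
One way to find cleanup candidates is to look for PersistentVolumes that are not bound to any claim. These are volumes in the Released phase, whose claim was deleted but, under a Retain policy, whose underlying disk remains. They also include volumes in the Available phase, which are not currently bound. The sketch below works on data shaped like the output of `kubectl get pv -o json`. The sample volumes are made up, and the approach only sees disks Kubernetes knows about, not cloud volumes left behind outside the cluster.

```python
def orphaned_volumes(pv_list: dict) -> list[dict]:
    """Return PVs not bound to any claim, with their size and reclaim policy."""
    candidates = []
    for pv in pv_list.get("items", []):
        phase = pv.get("status", {}).get("phase")
        if phase in ("Released", "Available"):
            candidates.append({
                "name": pv["metadata"]["name"],
                "phase": phase,
                "capacity": pv["spec"]["capacity"]["storage"],
                "reclaimPolicy": pv["spec"].get("persistentVolumeReclaimPolicy"),
            })
    return candidates


if __name__ == "__main__":
    # Made-up sample shaped like `kubectl get pv -o json`. For real data, save that
    # command's output to a file and load it with json.load().
    sample = {"items": [
        {"metadata": {"name": "pv-a"}, "status": {"phase": "Bound"},
         "spec": {"capacity": {"storage": "10Gi"}, "persistentVolumeReclaimPolicy": "Delete"}},
        {"metadata": {"name": "pv-b"}, "status": {"phase": "Released"},
         "spec": {"capacity": {"storage": "100Gi"}, "persistentVolumeReclaimPolicy": "Retain"}},
        {"metadata": {"name": "pv-c"}, "status": {"phase": "Available"},
         "spec": {"capacity": {"storage": "50Gi"}, "persistentVolumeReclaimPolicy": "Retain"}},
    ]}
    for vol in orphaned_volumes(sample):
        print(vol)
```

Review each candidate before deleting it, because an Available volume may have been pre-provisioned on purpose.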

Augmented FinOps also provides unique benefits in Kubernetes storage optimization. Organizations can use a platform like CloudBolt to quickly identify unused storage or orphaned volumes that are needlessly incurring costs.

#4 Leverage monitoring and logging

As with any other tool or platform, monitoring and logging are indispensable for maintaining Kubernetes' health and performance. Prometheus, often paired with Grafana, has gained popularity thanks to its powerful monitoring capabilities and intuitive metrics visualization. Prometheus collects and stores metrics that give detailed insight into cluster performance, while Grafana lets you build informative, customizable dashboards that present this data in an accessible format.
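
As a small example of pulling cost-relevant data out of Prometheus, the sketch below builds an instant query against Prometheus's HTTP API (/api/v1/query) and parses the response into CPU cores used per namespace. The server address is hypothetical. The query assumes the cluster exposes cAdvisor's container_cpu_usage_seconds_total metric, as typical kubelet scrape setups do.

```python
import json
import urllib.parse
import urllib.request

PROMETHEUS_URL = "http://prometheus.example:9090"  # hypothetical address

# CPU cores consumed per namespace, averaged over 5 minutes. container!="" drops
# the pod-level aggregate series so usage is not double counted.
CPU_BY_NAMESPACE = (
    'sum by (namespace) (rate(container_cpu_usage_seconds_total{container!=""}[5m]))'
)


def query_url(base: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(payload: dict) -> dict:
    """Turn an instant-vector response into {namespace: value}."""
    if payload.get("status") != "success":
        raise RuntimeError(f"query failed: {payload.get('error')}")
    return {
        series["metric"].get("namespace", "<none>"): float(series["value"][1])
        for series in payload["data"]["result"]
    }


def cpu_by_namespace(base: str = PROMETHEUS_URL) -> dict:
    """Run the query against a live server (requires network access)."""
    with urllib.request.urlopen(query_url(base, CPU_BY_NAMESPACE), timeout=10) as resp:
        return parse_vector(json.load(resp))
```

Comparing these figures with each namespace's CPU requests shows where requested capacity goes unused.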

Cloud-specific tools like AWS CloudWatch offer integrated solutions tailored to their respective cloud environments. Beyond basic monitoring and logging, these tools provide advanced features such as automated responses to specific events.

Here are a few best practices that can keep you up to date on what’s going on with the environment:

Monitor your clusters

Quite a few factors go into choosing the right monitoring for your environment, and cost, features, and architecture are all essential to consider. For example, native cloud tools like CloudWatch or Azure Monitor have strong features but can become expensive, while Prometheus provides a great deal of functionality but may be more complex to operate than you need. Understand your requirements before deciding which tool to use.

Use distributed tracing

For more in-depth analysis, employ distributed tracing tools to track and visualize requests as they flow through the microservices within your cluster. It helps pinpoint specific issues within a complex system of interconnected services, enabling more effective troubleshooting and optimization.
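
To show what tracing data makes possible, the sketch below takes the spans of one hypothetical request, as a tracer such as OpenTelemetry might record them. It computes each service's self time, meaning its own duration minus time spent waiting on its child calls. It assumes child spans do not overlap, and tracing backends typically surface this kind of breakdown in their UIs for you.

```python
from collections import defaultdict

# One hypothetical request: frontend calls cart, then checkout; checkout calls payments.
# Times are milliseconds from the start of the request.
spans = [
    {"id": "a", "parent": None, "service": "frontend", "start": 0, "end": 120},
    {"id": "b", "parent": "a", "service": "cart", "start": 10, "end": 40},
    {"id": "c", "parent": "a", "service": "checkout", "start": 45, "end": 115},
    {"id": "d", "parent": "c", "service": "payments", "start": 50, "end": 105},
]


def self_time_by_service(spans: list[dict]) -> dict:
    """Each service's span duration minus time spent in child spans (no overlap assumed)."""
    child_time = defaultdict(int)
    for span in spans:
        if span["parent"] is not None:
            child_time[span["parent"]] += span["end"] - span["start"]
    totals = defaultdict(int)
    for span in spans:
        duration = span["end"] - span["start"]
        totals[span["service"]] += duration - child_time[span["id"]]
    return dict(totals)


times = self_time_by_service(spans)
print(times)                      # {'frontend': 20, 'cart': 30, 'checkout': 15, 'payments': 55}
print(max(times, key=times.get))  # payments
```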

Implement augmented FinOps solutions

With comprehensive platforms like CloudBolt, users can obtain a single pane of glass view into the state of their clusters. CloudBolt makes monitoring significantly more manageable and streamlined, providing a holistic view of the environment's state along with seamless integrations.

#5 Leverage quotas within namespaces

Applying quotas to namespaces is a key practice for managing resource allocation and maintaining cluster efficiency. By setting quotas, administrators can limit the amount of resources like CPU, memory, and storage that each namespace consumes, preventing any single namespace from overusing cluster resources.
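
Below is a ResourceQuota written as a Python dict that mirrors the YAML manifest, plus a simplified model of the admission check. The API server rejects a new object if it would push the namespace's tracked usage past any hard limit. The namespace `team-a`, the quota values, and the hypothetical `exceeded` helper are illustrative. The helper assumes quantities have already been converted to common units.

```python
GI = 2**30

# A ResourceQuota capping one namespace (values are illustrative).
quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "compute-and-storage", "namespace": "team-a"},
    "spec": {
        "hard": {
            "requests.cpu": "4",
            "requests.memory": "8Gi",
            "limits.cpu": "8",
            "limits.memory": "16Gi",
            "requests.storage": "100Gi",
            "persistentvolumeclaims": "10",
        }
    },
}


def exceeded(hard: dict, used: dict, incoming: dict) -> list:
    """Resources that would go over quota if `incoming` were admitted.

    All three dicts hold numbers in common units (millicores, bytes)."""
    return [name for name, amount in incoming.items()
            if used.get(name, 0) + amount > hard[name]]


# Numeric equivalents of the manifest's requests.cpu and requests.memory.
hard = {"requests.cpu": 4000, "requests.memory": 8 * GI}
used = {"requests.cpu": 3500, "requests.memory": 6 * GI}
print(exceeded(hard, used, {"requests.cpu": 250, "requests.memory": 1 * GI}))   # []
print(exceeded(hard, used, {"requests.cpu": 1000, "requests.memory": 1 * GI}))  # ['requests.cpu']
```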

Quotas also play a vital role in cost management. They help control the overall expenditure on cluster resources by capping the resources that each namespace consumes. This is particularly important in cloud environments where resource usage directly translates to costs.

Continuously monitor resource usage and adjust quotas to reflect changes in workload requirements or to improve efficiency. As with right-sizing nodes, limits and requests are useful here too. Combining them with quotas gives you a more granular and effective resource management strategy within each namespace.
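
The Kubernetes documentation notes that once a quota covers CPU or memory, pods must specify requests or limits for those resources, or the quota system may reject them. A LimitRange supplies namespace-wide defaults so that this does not happen. The sketch below builds one as a Python dict and prints it as JSON. The namespace `team-a` and all values are hypothetical.

```python
import json

# Defaults for containers in the namespace that omit requests/limits, so they are
# still admitted (and counted) under a compute ResourceQuota.
limit_range = {
    "apiVersion": "v1",
    "kind": "LimitRange",
    "metadata": {"name": "container-defaults", "namespace": "team-a"},
    "spec": {
        "limits": [
            {
                "type": "Container",
                "defaultRequest": {"cpu": "250m", "memory": "256Mi"},  # when requests are omitted
                "default": {"cpu": "500m", "memory": "512Mi"},         # when limits are omitted
                "max": {"cpu": "2", "memory": "2Gi"},                  # per-container ceiling
            }
        ]
    },
}

if __name__ == "__main__":
    # Save the output as limitrange.json and apply with: kubectl apply -f limitrange.json
    print(json.dumps(limit_range, indent=2))
```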

CloudBolt’s single pane of glass allows users to break down costs and usage by namespace, letting organizations get granular insight into the usefulness of quotas. By seeing how much utilization occurs within a namespace, you can make an educated decision on the effectiveness of your quotas.
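
CloudBolt's own allocation method is not described here. As a generic illustration, one simple approach splits a cluster's cost across namespaces in proportion to their resource requests. The Python sketch below weights CPU and memory shares equally, which is an arbitrary assumption. It also ignores idle capacity and actual usage, both of which fuller allocation models account for. The pod data and the $1,000 cluster cost are made up.

```python
from collections import defaultdict

GI = 2**30


def allocate_cost(pods: list, cluster_cost: float, cpu_weight: float = 0.5) -> dict:
    """Split cluster_cost by namespace using each namespace's share of requests.

    pods: (namespace, cpu_request_millicores, memory_request_bytes) tuples.
    """
    cpu, mem = defaultdict(int), defaultdict(int)
    for ns, cpu_m, mem_b in pods:
        cpu[ns] += cpu_m
        mem[ns] += mem_b
    total_cpu, total_mem = sum(cpu.values()), sum(mem.values())
    return {
        ns: cluster_cost * (cpu_weight * cpu[ns] / total_cpu
                            + (1 - cpu_weight) * mem[ns] / total_mem)
        for ns in cpu
    }


pods = [("team-a", 1000, 2 * GI), ("team-a", 500, 1 * GI), ("team-b", 500, 5 * GI)]
print(allocate_cost(pods, cluster_cost=1000.0))  # {'team-a': 562.5, 'team-b': 437.5}
```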

#6 Make use of cloud resources

CNCF estimates that only 22% of Kubernetes users run a cluster on-premises, which means the majority of clusters run in the cloud. Understanding which tools are native to the cloud platform hosting your cluster is therefore imperative to Kubernetes cost optimization.

Each platform has its own proprietary tools that you can use to cut costs. Take time to understand what they are, what they do, and whether they're right for you. Most platforms also offer a tool for setting budgets on specific services, letting you control costs and receive alerts when spending surpasses a defined threshold.
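
Provider budget tools handle this for you. Purely to illustrate the logic, here is a Python sketch that projects month-end spend linearly from spend so far. It then flags each threshold that has already been crossed, or that the forecast will cross. The linear projection, the 80%/100% thresholds, and the sample figures are simplifying assumptions.

```python
def budget_alerts(spend_to_date: float, day_of_month: int, days_in_month: int,
                  budget: float, thresholds=(0.8, 1.0)):
    """Return a naive linear month-end forecast and the alerts it triggers.

    An 'actual' alert means spend has already crossed a threshold;
    a 'forecast' alert means projected month-end spend will cross it."""
    forecast = spend_to_date / day_of_month * days_in_month
    alerts = []
    for t in thresholds:
        limit = budget * t
        if spend_to_date >= limit:
            alerts.append(("actual", t))
        elif forecast >= limit:
            alerts.append(("forecast", t))
    return forecast, alerts


forecast, alerts = budget_alerts(600.0, day_of_month=15, days_in_month=30, budget=1000.0)
print(forecast, alerts)  # 1200.0 [('forecast', 0.8), ('forecast', 1.0)]
```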

One of the most significant advantages of using a platform like CloudBolt is centralizing all pertinent data in one place. While cloud-native tools are essential, hybrid and multi-cloud environments need a way to consolidate data from every provider into a single platform.


Conclusion

Striking a balance between ideal performance and cost efficiency is the prime objective of Kubernetes cost optimization. FinOps technologies promise to bring even greater depth and precision to financial operations in cloud environments. The future of augmented FinOps holds inspiring prospects with advancements like feedback loops and conversational AI.

Embracing solutions like CloudBolt can be a significant step towards mastering Kubernetes cost optimization and propelling your infrastructure into a future of enhanced efficiency and innovation. CloudBolt effectively integrates the principles of augmented FinOps and aligns with the best practices required to optimize Kubernetes environments. Don’t get left behind – schedule a demo today and see if CloudBolt is right for you!
